I agree with you that hate has no place in a healthy community, but addressing constructive criticism is essential to the success of any project. Whether the promised positive impact or the potential drawbacks will dominate in the long term still seems like an open question to me, and one that can only be answered with more adequate economic modelling. I am not a fan of pushing a remarkably complex change forward despite potential red flags, in the hope that the (so far unquantified) costs will turn out to be negligible in production, even though I don’t doubt that the team behind this is acting with the best intentions.

I believe you may be right that, due to the smoothing effect on fees, we’ll see the low end of fees increase slightly just as we’ll see the high end decrease slightly. I don’t expect this to have a large impact, as I think the algorithm adjusts fast enough that we’ll see only a limited tightening of the min/max fees over a given period. If you want to provide a more complete argument on this point, that would be cool!

Hi @mdalembert, you may be interested in these simulations (see also others here).

The gist is that we model first-price auction users (the current paradigm), then 1559 users, and observe how gas price oracles and user fees vary. It doesn’t exactly answer your question but it could be modified to. Yet there might be some insights from it that are applicable here.

Gas price oracles are basically noisy and laggy estimators of an invisible “market price” that equalises supply and demand. The way oracles are modelled, they tend to be sticky: if everyone is overpaying, oracles will keep quoting high prices (reflexivity). That’s a known effect of first-price auction systems, where you usually strategise based on what you assume about other users. (By the way, EthGasStation has a bunch of checks to break this reflexivity as much as possible, but the point stands that the estimate still depends on past users.)
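A toy model can make the reflexivity argument concrete. The sketch below assumes a hypothetical oracle that quotes a fixed percentile of the gas prices included over the last 20 blocks (this is not EthGasStation’s actual algorithm), and assumes that users who do not follow the oracle know the market-clearing price. It counts how many blocks the quote takes to return to that price after a spike, depending on how many users per block simply bid whatever the oracle quotes. All names and numbers are illustrative.

```python
from collections import deque


class PercentileOracle:
    """Toy first-price-auction oracle (hypothetical): quotes a fixed
    percentile of the gas prices included in the last `window_blocks` blocks."""

    def __init__(self, window_blocks=20, percentile=40):
        self.percentile = percentile
        self.history = deque(maxlen=window_blocks)  # one list of prices per block

    def observe_block(self, included_prices):
        self.history.append(list(included_prices))

    def quote(self):
        prices = sorted(p for block in self.history for p in block)
        idx = min(len(prices) - 1, len(prices) * self.percentile // 100)
        return prices[idx]


def blocks_until_recovery(followers, spike_price=200, market_price=50,
                          txs_per_block=10, window_blocks=20, max_blocks=100):
    """Blocks until the quote falls back to `market_price` after a spike.

    Each block, `followers` users bid the oracle's current quote and the
    remaining users bid the market-clearing price (assumed known to them).
    Returns None if the quote has not recovered within `max_blocks`.
    """
    oracle = PercentileOracle(window_blocks=window_blocks)
    for _ in range(window_blocks):  # the whole window is filled by the spike
        oracle.observe_block([spike_price] * txs_per_block)
    for block in range(max_blocks):
        quote = oracle.quote()
        if quote == market_price:
            return block
        # Followers' inclusions feed the oracle's own quote back into it.
        oracle.observe_block([quote] * followers +
                             [market_price] * (txs_per_block - followers))
    return None


print(blocks_until_recovery(0), blocks_until_recovery(5), blocks_until_recovery(10))
```

Under these assumptions the three calls return 9, 17 and `None`: the more users follow the oracle, the longer the quote stays high, and if everyone follows it, it never comes down on its own.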

With 1559, you are quoted a market price, so usually you can either take it or leave it, no need to check what others are doing. When you have users who are underbidding (like LegacyUsers in the “Final boss” section of the notebook), they can still get in given the right circumstances (basefee needs to come down enough), but they should expect their wait time to be greater.
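The “take it or leave it” behaviour, and why an underbidding legacy user simply waits, follows from the fee rules. The sketch below is modelled on the fee rules in the EIP-1559 specification as I understand them (a legacy gas price is treated as both the max fee and the max priority fee); the function names are illustrative.

```python
def effective_gas_price(base_fee, max_fee, max_priority_fee):
    """Per-gas price paid in a block with `base_fee`, or None if the
    transaction cannot be included at that base fee (it waits in the pool)."""
    if max_fee < base_fee:
        return None
    priority_fee = min(max_priority_fee, max_fee - base_fee)  # goes to the miner
    return base_fee + priority_fee  # the base_fee part is burned


def legacy_effective_gas_price(base_fee, gas_price):
    """A legacy bid uses its gas price as both max fee and max priority fee."""
    return effective_gas_price(base_fee, gas_price, gas_price)


# A 1559 user quoted a base fee of 100 caps at 120 with a tip of 2: pays 102.
print(effective_gas_price(100, 120, 2))
# A legacy user bidding 90 cannot get in while the base fee is 100...
print(legacy_effective_gas_price(100, 90))
# ...but is included once the base fee falls to 85, paying the full 90.
print(legacy_effective_gas_price(85, 90))
```

The underbidding legacy user does get in eventually, and whatever it bid above the base fee at that point goes to the miner as a tip.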

So I think Micah is right when he says that 1559 narrows the band: you have fewer users underpaying, but also fewer users overpaying.

This is also a good point, and some work is being done on formalising arguments to help decide how fast or slow basefee changes should be. Realistically, mainnet data will be necessary to see how the mechanism behaves in practice and where we fall on that tradeoff. But I never really thought of the tradeoff in terms of being more permissive to users who underbid; that seems like an interesting thread to explore.

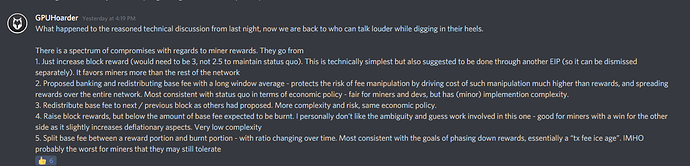

Here is the latest update from the discussions with miners in the 1559-miners Discord, working towards a compromise. Below is a summary from GPUHoarder:

There is a spectrum of compromises with regard to miner rewards. They range from:

- Just increase the block reward (it would need to be 3, not 2.5, to maintain the status quo). This is technically simplest, but it is also suggested to be done through another EIP (so it can be dismissed separately). It favors miners more than the rest of the network.

- Proposed: banking the base fee and redistributing it with a long-window average. This protects against the risk of fee manipulation by driving the cost of such manipulation much higher than the rewards, and spreads rewards over the entire network. Most consistent with the status quo in terms of economic policy; fair for miners and devs, but has (minor) implementation complexity.

- Redistribute the base fee to the next / previous block, as others had proposed. More complexity and risk, same economic policy.

- Raise block rewards, but below the amount of base fee expected to be burnt. I personally don’t like the ambiguity and guesswork involved in this one. Good for miners, with a win for the other side as it slightly increases deflationary aspects. Very low complexity.

- Split the base fee between a reward portion and a burnt portion, with the ratio changing over time. Most consistent with the goals of phasing down rewards; essentially a “tx fee ice age”. IMHO probably the worst option for miners that they may still tolerate.
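To illustrate why banking with a long window makes manipulation expensive, here is a minimal sketch of one possible reading of the second option: base fees are not burned, and each miner is paid the average base-fee revenue of the last `window` blocks. The class name, the exact payout rule and the 100-block window are all hypothetical placeholders; the real mechanism and window length are still open questions.

```python
from collections import deque
from fractions import Fraction


class RollingFeeBank:
    """Hypothetical 'bank and redistribute' scheme: base fees are not burned;
    each block's miner is paid the average base-fee revenue of the last
    `window` blocks (its own block included)."""

    def __init__(self, window):
        self.window = window
        self.recent = deque([0] * window, maxlen=window)

    def process_block(self, base_fee_revenue):
        """Bank this block's base-fee revenue and return the miner's payout."""
        self.recent.append(base_fee_revenue)
        return Fraction(sum(self.recent), self.window)


# A miner who pushes 1000 units of extra base fee through its own block
# (e.g. by stuffing it with its own transactions) gets only 1/window back;
# the rest is paid out to the following window - 1 blocks.
bank = RollingFeeBank(window=100)
payouts = [bank.process_block(1000)] + [bank.process_block(0) for _ in range(150)]
print(payouts[0], sum(payouts))  # 10 1000
```

Total payouts equal total base fees collected, just spread out over time, which is why this option keeps the current economic policy while removing most of the incentive to manipulate the base fee.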

None of the proposed ideas are easy to implement and should have EIPs created for them so we can evaluate their technical and economic benefits to the entire Ethereum ecosystem. They can’t be included in EIP-1559 because most EIPs are designed to achieve only ONE thing.

You should create proper proposals and blog posts for them so they can be properly evaluated.


Hi @spsky, this is untrue. Recommendation 1 is as easy as it can get.

Source: the R&D Discord.

This is probably the best.

I frankly think manipulation fears are grossly exaggerated.

The simplest starting point of transferring base fee to the miners instead of burning it is the best IMO.

If manipulation starts happening, the algorithm can be tweaked to address it. Otherwise it seems like we would be addressing a problem that may not exist.

It has always been clear to me that the true reason for fee burning was to raise the token price. Just sift through Twitter and see all the excitement about ETH becoming a deflationary currency.

The community needs to find a compromise on this and many other subjects. The best approach is to have a functioning democracy and a vote, with all sides represented.

If developers start a war, as Micah suggested in his post, miners can simply fork the network. There are many good software engineers miners could hire, and maintaining a blockchain is not rocket science, frankly. Moreover, the current PoW chain could run 10+ times faster if someone started investing in it.

It is a classic game-theory dynamic where each party brings an even more powerful weapon to the table, and ultimately it only leads to escalation.

The right thing is to find a compromise, and I think Micah’s post was more like a war plan.

“Any censorship attack by miners against the interest of users will almost certainly result in the core developers taking very aggressive action against miners.”

I was born in the USSR, and it very much reminds me of how we talked to Americans: “Do something bad and we will nuke you guys!”

The truth is there are good people on both sides, but it is conflict psychology 101 that the other party gets turned into an enemy and demonized.

Let’s go back to Satoshi’s principles, @MicahZoltu.

Blockchain was created as a way for people to make collective decisions and compromises. It is a tool for democracy and is supposed to make conflicts addressable. For a long time, ETH has not had a formal governance model. The best resolution of this discussion, IMO, is to create a model that respects and balances all parties.

I am happy to do some legwork on the implementation and create an EIP, but I would prefer not to create EIPs for every option. As such, it would be nice to gain some sense of consensus on which one or more options may gain traction.

My favorite is banking the fee and distributing it over a long rolling window as it maintains the same economic policy as the present while explicitly not disturbing the stated motivations of 1559.

Open questions would be the exact mechanism for doing this, as well as how many blocks long that rolling window should be. This will require some simulations and exploratory work.

Someone else can easily create a simple EIP proposing the block reward increase. I recommend at least some cursory analysis of expected future fees under 1559 and the net inflationary or deflationary effect of the recommended increase.
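A cursory version of that analysis is just per-block arithmetic. The sketch below assumes the 3 ETH reward figure from the summary above and a hypothetical 12.5M gas used per block; it ignores uncle rewards and tips, and a real analysis would need a distribution of expected future base fees rather than single values. Function names are illustrative.

```python
GWEI_PER_ETH = 10**9


def net_issuance_gwei(block_reward_eth, gas_used, base_fee_gwei):
    """New ETH issued minus base fee burned in one block, in gwei.
    Ignores uncle rewards and priority fees."""
    return block_reward_eth * GWEI_PER_ETH - gas_used * base_fee_gwei


def break_even_base_fee_gwei(block_reward_eth, gas_used):
    """Base fee at which the burn exactly cancels the block reward."""
    return block_reward_eth * GWEI_PER_ETH / gas_used


# Hypothetical inputs: a 3 ETH reward and 12.5M gas used per block.
print(net_issuance_gwei(3, 12_500_000, 80) / GWEI_PER_ETH)  # 2.0 ETH per block
print(break_even_base_fee_gwei(3, 12_500_000))              # 240.0 gwei
```

Below the break-even base fee the chain is net inflationary per block, above it net deflationary; the interesting question is how often future base fees would sit on each side.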

In my opinion, raising the reward is deceptively simple technically, but quite complex and nuanced in the range of effects it could have on economic aspects. The same can be said to some degree of implementing 1559 without mitigation, though the window of possible effects likely shifts towards deflationary.

Redistributing fees may require more technical work but the effects are very predictable and consistent.

1 and 4 seem fine as two standalone EIPs.

Do you have data to back it up?

Part of the goal is to accrue value to ETH. If SOME miners aren’t BULLISH on ETH accruing long-term value and won’t support one of the best proposals on ETH value accrual, then why mine ETH in the first place?

Why should the Ethereum community at large bend over backward for their interests?

ETH value accrual is important to the Ethereum ecosystem: it brings in new investors, provides more funding for development, and signals to institutions that Ethereum is a proper platform to build on, because more people will be invested in ETH/Ethereum as it accrues value, etc.

Hey, this is the first rule of software engineering: you do not solve problems that do not exist. The opposite is called overengineering.

You release first, and then iterate.

Well, one needs evidence for the word SOME. Evidence can only be created by a vote. Maybe it is a LOT. Without a vote, the argument is just aggression (there are many of us and few of you).

Miners are the only guys who actually did vote. The opposite party is using a very typical escalation of violence argument (if you do not agree, we will punish you).

In addition to being aggressive (I think Micah’s blog says “we need to aggressively …”), the argument is weak because miners can fork the network anytime and hire engineers to maintain it.

Maintaining geth is not rocket science, let’s be frank. It is a highly skilled software engineering job.

The problem with authoritarian systems based on power is that they are always better than democracy short term but always fail long term.

It is good miners had this vote, now we know who thinks what.

Maybe the core team could carry out an anonymous vote to voice their opinion?

Anonymity is obviously a must, because many people are employees and their opinion may differ from their employer’s.

Do we have proof that every single geth contributor supports the EIP? Why not let them express their opinion freely?

What ETH needs is a governance system where all parties are democratically represented and can vote. Otherwise, there is no conflict resolution.

Hey @barnabe, it should certainly be possible to modify some of those simulation notebooks in order to answer the questions I was asking. Unfortunately as-is they only seem to suggest that adopting the canonical EIP-1559 bidding strategy gives the user certain advantages over sticking to legacy transactions under the assumption that we do switch to EIP-1559 (and even that doesn’t seem 100% clear from your results since one can see legacy transactions using the low-cost gas oracle get a slightly lower price than EIP-1559-aware users, despite them being forced to pay for the base fee like anyone else).

In order to answer the question of whether EIP-1559 is going to improve things overall, one would have to repeat the simulation without the imposition of a base fee, and then compare the results based on some more or less objective cost/benefit metric. And there is already quite some potential for disagreement in finding the right metric, because users on a tight budget (like me) are likely to place more weight on their average transaction cost, while novice users with a low risk tolerance are more likely to prefer more deterministic transaction confirmation times, even if it comes at the cost of higher gas fees.

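As a sketch of the kind of comparison meant here, one could report two numbers per simulated scenario instead of one: the mean price paid (what budget users care about) and the spread of confirmation delays (what risk-averse users care about). The metric choice and the outcome data below are hypothetical; how to weight the two numbers against each other is precisely the contentious part.

```python
import statistics


def user_metrics(outcomes):
    """Summarise (price_paid_gwei, wait_blocks) pairs for included txs:
    mean price matters to budget users, wait spread to risk-averse users."""
    prices = [price for price, _ in outcomes]
    waits = [wait for _, wait in outcomes]
    return statistics.mean(prices), statistics.pstdev(waits)


# Hypothetical outcomes for the same users under two fee mechanisms.
mechanism_a = [(50, 1), (60, 1), (40, 5), (50, 9)]
mechanism_b = [(55, 1), (55, 2), (60, 1), (50, 2)]
print(user_metrics(mechanism_a))  # cheaper on average, erratic waits
print(user_metrics(mechanism_b))  # dearer on average, predictable waits
```

Neither mechanism dominates here, which is the situation where the two user groups would disagree about which one is “better”.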

In addition it would be beneficial to simulate alternative scenarios to EIP-1559 (like the banking and moving-average redistribution of base fees other people have been requesting, which I agree would have the benefit of making the inflationary/deflationary behavior of the currency more deterministic). In particular I have a hunch that even a slight increase in the block gas limits would yield a greater improvement in average gas costs than this proposal, and a reduction in confirmation times that could get close to what this proposal achieves for most users thanks to the increased network throughput, simultaneously improving both metrics I was discussing above instead of trading one for the other.

Yep, that’s very true, but it’s also true that real gas oracles use algorithms substantially more sophisticated than those used in the simulations I’ve had the chance to read through so far. In order to obtain a fair comparison of this proposal vs. the status quo, it seems necessary to model at least some of the mempool dependency of the current oracles, and I suspect that would already reduce the stickiness you have observed in your results to some extent (though I agree that it’s unlikely to disappear entirely; that might be acceptable if, despite the increased stickiness, users get lower transaction fees overall!).

Another interesting experiment to try out (roughly converse to your modelling of legacy users in an EIP-1559 network) would be to model the present protocol in combination with a less sticky gas oracle consisting of an off-chain implementation of the base fee, taking advantage of mempool information in order to make base fee adjustments instead of relying on the gas utilization of the double block. If that truly leads to a less reflexive gas price estimator users may have a personal incentive to opt in, avoiding the controversial aspect of imposing a base fee on everyone (However it would certainly increase the risk that those users would have to resubmit transactions compared to the present proposal).
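To make that experiment concrete, here is one possible shape for such an oracle. Everything in it is an assumption for illustration: the function name, treating the pending mempool bids as a snapshot of demand, capping that demand at twice the target, and reusing the 1/8 adjustment rate. Whether such an estimate could be gamed is left open.

```python
def offchain_base_fee(base_fee, mempool, gas_target, denominator=8):
    """One step of a hypothetical off-chain 'base fee' estimator.

    Applies an EIP-1559-style update, but measures demand as the gas of
    pending txs bidding at least `base_fee` (capped at 2x target), instead
    of the gas used by the last block. `mempool` is a list of
    (gas_price, gas) pairs. Uses integer floor division for simplicity.
    """
    demand = min(sum(gas for price, gas in mempool if price >= base_fee),
                 2 * gas_target)
    delta = base_fee * (demand - gas_target) // (gas_target * denominator)
    return max(base_fee + delta, 1)


# Hypothetical mempool: 1M gas bid at each price from 1 to 30 gwei.
mempool = [(price, 1_000_000) for price in range(1, 31)]
estimate = 100
for _ in range(50):
    estimate = offchain_base_fee(estimate, mempool, gas_target=15_000_000)
print(estimate)  # 16: the price at which pending demand equals the target
```

Because the estimate reacts to the mempool rather than to already-included blocks, it does not depend on what past users chose to pay, which is the kind of reflexivity the experiment would try to isolate.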

Yeah. I think it would be beneficial to have some quantitative understanding of how optimal the selected averaging parameter actually is. Many people seem to think that this proposal will reduce the frequency of large gas price surges thanks to the doubled block size, and that it will improve the user experience by moving away from first-price auctions as pricing mechanism. What is not so clear in the various explanations of EIP-1559 is that there is a necessary trade-off between both of the promised advantages, that can be quantified with the averaging weight of the base fee. It might be challenging to find the right balance between both because, once again, more budget-oriented users are likely to benefit from a slower average while more risk-averse users are likely to prefer a faster average. Any idea what sort of metric the proposed value of this parameter was intended to optimize? (Assuming that it is the result of some sort of quantitative analysis)

These are excellent points, thank you!

So when legacy users do get a smaller price (compared to other 1559 users who appeared at the same time), it is at the cost of a longer delay, which I guess is fine if you don’t have time preferences and just want the transaction to get in sooner or later. There is also an asymmetry in the behaviour of 1559 users: they are modelled as “take it or leave it”, meaning either the current basefee allows their inclusion or they balk. But they could be modelled as sending their bid anyway, with a maxfee lower than the current basefee, in which case I would expect the results to look closer to those of legacy users (they wait in the pool until basefee drops and miners include them).

Yes this is the change that was on my mind. Right now the legacy users live in a 1559 environment, so it’s not a true comparison between the current paradigm and a 1559 future (but that wasn’t necessarily the point of the notebook, I was more interested in this effect of blending legacy and 1559 users in this new 1559 paradigm).

The metrics are hard, but it’s also hard because there are a lot of factors to control for between the 1559 environment and the legacy environment. Answering “would I be better off in this scenario vs the other one” is probably possible in aggregate (like how I check the difference between expected and realised types of users included in blocks), but not individually (like “is user X better off bidding that way in legacy or the same way in 1559”). There are likely still interesting things to dig through.

Right, but this seems orthogonal to 1559. We assume block gas limits are constraints (weak ones, since miners can tune them up or down) and do our best to have a predictable fee market in these constraints. Maybe you are asking what is the net effect of having 1559 (with or without the banking/redistribution) and moving gas limits. One effect might have to do with the dynamic behaviour of basefee and its update rule. Taking the “control theory” view, having flexibility over the block gas limit gives an extra parameter to tune should basefee be oscillating for instance.

Agreed! In this notebook I was more interested in extracting the “stylised fact” than in faithful reproduction. I didn’t want (nor do I think it is possible) to say “1559 will decrease stickiness by 37%!”, but finding the tradeoff between average gas prices and stickiness (which you could get by taking mempool data as a reference for the oracles) sounds very possible (or at least isolating the factors at play).

Intuitively I want to believe that it’s not possible to will a basefee into existence without some sort of binding constraint. The fee burn ensures it is not open to manipulation for instance. I see how you could construct basefee from mempool data (basically you’d get a noisy estimation of the demand curve at T so you could infer the “market price” from it) but couldn’t it be easily gamed then? I don’t have an attack in mind but it feels like you are getting too much for free! Interesting…

We’d have to dig through the historical records, but my intuition is that the 1/8 value of the update rate parameter was chosen with respect to how long it takes for basefee to 2x or 3x (I know it’s sort of tautological…). But no one believes it is the “correct” parameter, or even the correct update rule. Roughgarden formalised the space of possible update rules, and there are many variations to consider. One could even have some sort of control on the update rate itself (~gain scheduling). Empirical data would be useful to justify anything, so the plan seems rather to launch as is and decide on updates down the line. Upstream, we’ll be doing some theoretical work on how to think about these tradeoffs, so that it will hopefully become more of a “fit the data” problem.
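For reference, the update rule can be written in a few lines. The sketch below follows the shape of the rule in the EIP-1559 specification (the 8 is `BASE_FEE_MAX_CHANGE_DENOMINATOR` there) but is simplified, and the function names are illustrative. It also shows the 2x/3x framing: with consecutive full blocks (gas used at twice the target) the base fee grows by a factor of 1 + 1/8 per block, so doubling takes about 6 blocks and tripling about 10. A smaller denominator reacts faster and a larger one smooths more, which is the averaging trade-off discussed above.

```python
import math


def next_base_fee(base_fee, gas_used, gas_target, denominator=8):
    """Simplified EIP-1559-style update: the base fee moves by at most
    1/denominator per block, in proportion to how far gas_used is from target."""
    if gas_used > gas_target:
        delta = base_fee * (gas_used - gas_target) // gas_target // denominator
        return base_fee + max(delta, 1)
    delta = base_fee * (gas_target - gas_used) // gas_target // denominator
    return base_fee - delta


def blocks_to_multiply(factor, denominator=8):
    """Consecutive full blocks (gas_used = 2 * target) needed for the base fee
    to grow by `factor`, ignoring integer rounding."""
    return math.ceil(math.log(factor) / math.log1p(1 / denominator))


for d in (4, 8, 16):
    print(d, blocks_to_multiply(2, d), blocks_to_multiply(3, d))
# 4 4 5
# 8 6 10
# 16 12 19
```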

Usually, people are required to provide evidence to prove a claim as opposed to dismissing a claim for a lack of evidence, but I’d point at the history of Eth so far. The closest you would get to manipulation is pools including their payout txns on blocks for 1 gwei, but that is a far cry from the idea of a consistent pressure caused by filling blocks with junk transactions.

Again, EIP-1559 is not trying to reduce gas prices. That is not a goal of this EIP. There are a number of efforts underway to reduce gas prices (increase supply of block space) but that is orthogonal to this EIP. If your goal is reduced gas costs, I encourage you to support and offer assistance to people building those solutions like rollups and sharding.

EIP-1559 is not trying to prevent large gas price surges. I don’t know who is thinking this, but please send them links to resources that help them better understand the purpose of EIP-1559 and what it solves!

Oh, I hadn’t noticed this asymmetry; it would be interesting to see if you get substantially different results without it.

Yeah, that’s certainly useful in its own right; it’s good to make sure that people will have an incentive to switch to a new system before it’s put into production.

I guess it might be possible to game it, even though I can’t think of any attack vectors off the top of my head either. The perverse incentive to manipulate it seems somewhat reduced, though, since people would be able to stop using it if it starts returning garbage values at some point. Anyway, I didn’t intend that as some sort of foolproof oracle aiming to solve all of our gas estimation problems, but rather as a practical experiment to better understand whether the stickiness we observe in current gas price oracles is the result of some essential limitation of the underlying gas pricing mechanism, or whether it’s something we could improve by coming up with better estimation algorithms.


I feel like we keep talking past one another on this one… I understand that your final intention here is not to reduce gas prices, but the point I was trying to make in the paragraph you quoted is that it’s probably worth making some sort of quantitative comparison (in terms of whatever usability metric you do care about here, like variability in the transaction inclusion delay) between this proposal and a massively simpler one that simply increases the per-block gas supply by a small amount. If the latter gives you a comparable benefit and also reduces gas prices across the board maybe it’s worth doing just that by decree, since it doesn’t entail nearly as many risks as this proposal and it makes a larger share of the community better off. Right?

That’s fair. But whew, there is so much disinformation in this regard that I doubt I can dispel this myth on my own without the help of someone more influential in this community: more than half of the results I got when I searched the web after first hearing about this proposal pointed me in the wrong direction. Even Tim Roughgarden implies this will mitigate high transaction fees in section 3.2.2 of his article. And I think he’s right – with an appropriately chosen base fee averaging parameter.

If you dislike looking at the trade-off I was referring to as one between your ability to mitigate the variability of transaction fees and the usability benefit you’ve been arguing for (of being able to bid for your marginal utility), you can look at it roughly equivalently as a trade-off between reducing transaction delays (which according to @timbeiko’s “Why 1559?” seems to be an explicit goal) and the same usability benefit you were talking about: If the base fee averaging is so fast that it can go through the roof from one block to the next you’ve lost most of the promised elasticity benefit of double-size blocks: users may end up waiting unpredictable amounts of time even though they had set a cap that seemed just fine based on the demand they had just observed, or they may end up hugely overpaying if they had dared to set the cap according to their true marginal utility…

Increasing block size is a temporary solution as usage is likely to climb once gas prices decline, as usability attracts usage, and so even if we have a period of less-than-full blocks (gas prices at marginal opportunity cost for inclusion), it is likely that we would return to “competition for block space” at some point after that. Also, block size increases come with the rather large problem of state growth, which is a major problem with Ethereum right now. It could very easily be argued that a block size decrease would probably make sense for the long term health of Ethereum.

Check out “A Tale of Two Pricing Schemes: A User Story” by Micah Zoltu on Coinmonks (Medium), the linked section specifically, on why gas price estimation is an inherently hard problem (it is chaotic). There certainly may be room for improvement, but I don’t believe the problem is fully solvable just via better oracles, as even the best theoretical oracle we could build today would not be perfect due to the number of inputs into the system (all human users of the system at every moment in time).