During the PEEPanEIP discussion today on the gas limit, @vitalikbuterin suggested that moving to long-form might be valuable for more complete arguments.

Below is a short summary of the discussion (H/T @poojaranjan!), and what I see as the open-ended questions.

Quick Summary

Vitalik mostly covered the technical limitations for increasing the gas limit, as he previously articulated so well in his post.

The most interesting points Vitalik brought up, which are worth reiterating:

- The gas limit is not binary “safe” or “unsafe” - it’s a spectrum, and it must balance safety and usability.

- ETH design should make it easy for “many users” to run their own node, to protect users from the majority of hash or stake. Sync times should remain within the 12h-1d range.

- The gas limit is very much a question of community values, and should be decided by the community. Technical arguments are critical, but we should be careful not to allow them to be used to sneak in personal opinions.

Open-Ended Questions

I think most would agree with Vitalik’s technical analysis (even if I think he slightly mischaracterizes the limitation at the network layer - propagation vs bandwidth - and I think @AlexeyAkhunov, @vorot93 and the Erigon team might disagree with his storage/memory numbers).

However, there were a few points which remained open-ended, which are the reason for this discussion. Specifically:

- How is the gas limit actually being set right now?

- Who should set it?

- How should it be set?

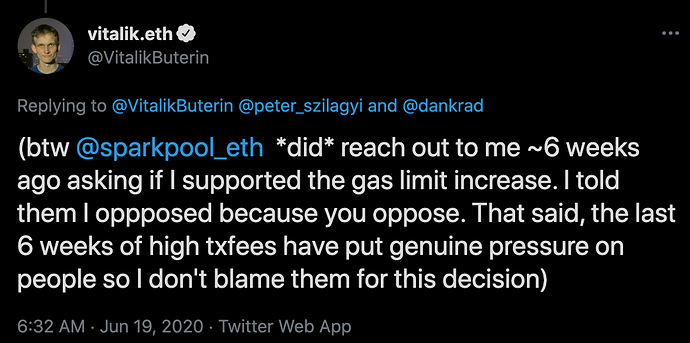

First, to the one point where I think Vitalik was completely off (partially due to simplification, partially due to not being in close touch with the smaller mining pools).

Vitalik outlined how:

- Miners adjust the gas limit with every new block they mine, pushing it up or down by up to 0.1%

- Miners listen to core devs (see 2016 Shanghai DDoS)
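To make the "up or down by up to 0.1%" mechanic concrete, here is a minimal sketch of the per-block adjustment. It assumes the bound is the parent gas limit divided by 1024 (which is where the roughly 0.1% comes from) and that the change must stay strictly below that bound. The function names are mine, for illustration only, and not taken from any client.

```python
# Assumption: the per-block change must be strictly less than parent_limit // 1024,
# which works out to roughly 0.1% of the parent gas limit.
BOUND_DIVISOR = 1024


def next_gas_limit(parent_limit: int, target: int) -> int:
    """Move the gas limit as far toward `target` as one block allows."""
    max_step = max(1, parent_limit // BOUND_DIVISOR - 1)
    if target > parent_limit:
        return min(target, parent_limit + max_step)
    return max(target, parent_limit - max_step)


def blocks_to_reach(start: int, target: int) -> int:
    """Count blocks needed if every block producer votes toward `target`."""
    limit, blocks = start, 0
    while limit != target:
        limit = next_gas_limit(limit, target)
        blocks += 1
    return blocks


if __name__ == "__main__":
    # Hypothetical scenario from this post: moving from 12.5M to 15M gas.
    print(blocks_to_reach(12_500_000, 15_000_000))
```

Under these assumptions, a unanimous push from 12.5M to 15M takes on the order of a couple hundred blocks, so the per-block cap is not what keeps the limit where it is. What matters is who is voting, which is the point of the next section.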

1. Miners adjust the gas limit

This is not how the gas limit is set in practice. The gas limit is being set by those constructing the blocks - the mining pools.

Tomato, tomâtoe, right? Well, no.

Mining pools are much more concentrated, with the top-3 pools controlling 55% of the hashpower. Pools, especially the smaller ones, are mostly DevOps operations, helping miners reduce payout variance and removing their need to run well-connected nodes of their own. Pools generally earn just 1% of the mining revenue, so they hold all the power but not the $.

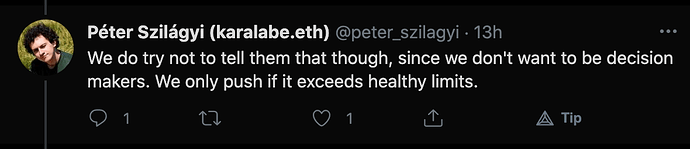

The gas limit is effectively set by the top-3 pools, and the rest of the mining pools just follow their lead. When we asked some of the top-20 pools why they were setting the gas limit at a certain level, they were surprised to learn that they control this parameter.

Let that sink in.

Obviously, someone on their tech team knows about and adjusts the gas limit parameter, but as an entity most neither know nor care to learn what the gas limit should be.

It is controlled by the top-3, with some push from Pools 4-6, but 40% of the hashpower just follows their lead.

Why is this so important that I’m dragging y’all through the details?

a. Because it means the gas limit is currently controlled by 3 actors. In fact, two are so big as to have veto power - without them the gas limit won’t change, since 40% are just followers. We have seen a single pool prevent the move to 15M gas for months, and unfortunately their incentives are misaligned with the long-term success of ETH.

b. Pools hold immense power, but capture relatively little income, and can be “persuaded” relatively cheaply - about $1-2M.
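To see why a single large pool can stall a change, here is a rough back-of-the-envelope sketch. It is my own simplified model, not a description of how any client or pool behaves: each block is produced by a pool in proportion to its hashpower share, pools either vote up, vote down, or hold, and the per-block change is capped at about 1/1024 of the current limit. The shares in the example are hypothetical.

```python
import math
from typing import Optional

# Assumption: each block can move the gas limit by at most ~1/1024 (about 0.1%).
BOUND_DIVISOR = 1024


def expected_blocks(start: int, target: int, share_up: float,
                    share_down: float) -> Optional[int]:
    """Approximate blocks for the limit to drift from `start` to `target`.

    `share_up` / `share_down` are the hashpower fractions voting up / down;
    everyone else is assumed to hold the current limit (hypothetical model).
    Returns None when the net vote is zero or points away from the target.
    """
    if start == target:
        return 0
    rate = (share_up - share_down) / BOUND_DIVISOR  # expected relative change per block
    if rate == 0:
        return None
    blocks = math.log(target / start) / math.log1p(rate)
    return math.ceil(blocks) if blocks > 0 else None


if __name__ == "__main__":
    # Hypothetical shares: everyone votes up.
    print(expected_blocks(12_500_000, 15_000_000, share_up=1.0, share_down=0.0))
    # Hypothetical shares: 35% vote up, one 25% pool votes down, the rest hold.
    print(expected_blocks(12_500_000, 15_000_000, share_up=0.35, share_down=0.25))
    # Hypothetical shares: the "down" side is larger, so the target is never reached.
    print(expected_blocks(12_500_000, 15_000_000, share_up=0.20, share_down=0.25))
```

In this toy model, one big pool voting the other way makes the move roughly ten times slower, and a slightly bigger one blocks it entirely. The real dynamics depend on what the followers actually do, which this sketch does not try to capture.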

Whenever I say this, everyone jumps in and says “but if the pools push the gas limit to dangerous levels they will just get forked by the devs”, which is obviously true.

But I’m not talking about raising the gas limit to 80M, I’m talking about moving from 12.5M to 15M gas. If tomorrow 5 pools push it to 17.5M gas, and in 3 months to 20M - would you know if I bribed them? Or Alameda, Wintermute, CMS, 3Arrows, Multicoin, Parafi or any of the other major DeFi trading firms for whom $1-2M is pocket change?

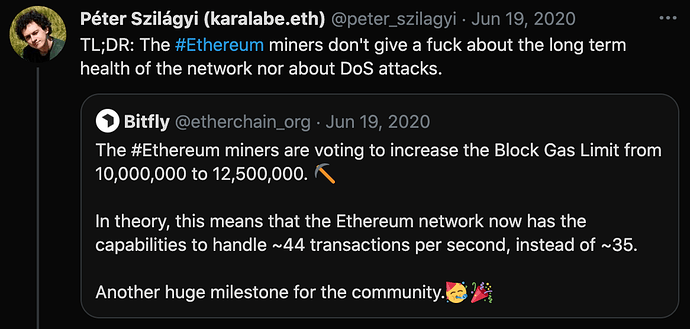

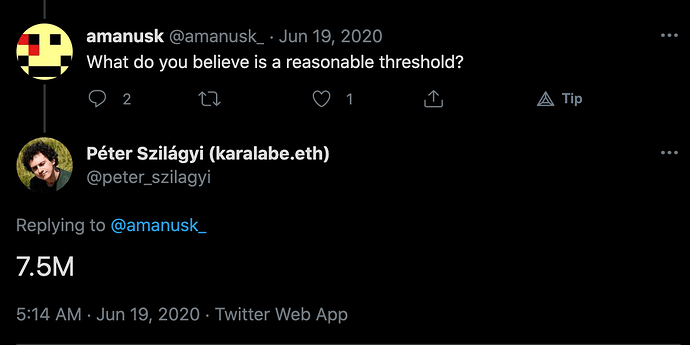

2. Miners listen to core devs

They don’t.

Sure, they will if there’s a catastrophe, obviously malicious behavior, or an immediate danger. They are already walking on thin ice with the community and everyone knows it.

But do they follow the gas limit core devs tell them? No.

We shouldn’t blame them though - different core devs have different opinions, and it’s very hard for them to gauge what the community actually wants.

Discussion

So who should decide the gas limit? I think everyone (Vitalik and Peter included) believes it shouldn’t be the core devs - that it should be “the community”.

But that doesn’t really say much… If I’m running my own ETH validator, what should I set the gas limit to be?

I don’t know what the different core devs think, and using the defaults is the same as letting the core devs set it (which nobody wants). And how do we pass the decision from mining pools to the community?

I think that instead of hiding the question behind a giant “this is technical!” sign, the community should be presented with:

- Insight into the different opinions among core devs.

- Explanations of the budget implications: ETH can handle X if nodes run on a $75 Raspberry Pi, Y if nodes run on $400 laptops, or Z if it requires an $800 laptop to run a node. There will be disagreements on the assessment, and these will change over time due to improvements to HW and client implementations, but it would be a good starting point.

- A way for the community to make its desires clear, and an incentive for pools to follow the community’s desires.

I know there are those who disagree with me, and I’d be glad to discuss where we diverge in our view of the current situation and the potential solution.