Real-time proving has arrived

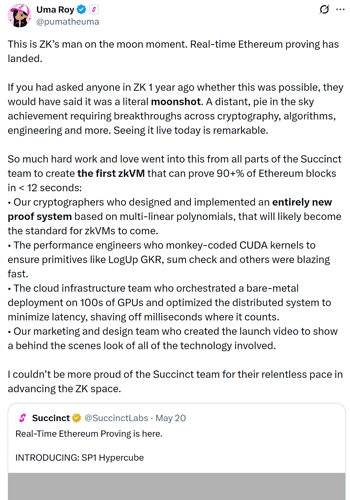

Last week, Succinct announced that they had achieved real-time proving:

We should see this as an amazing success, and as the moment when the big bet Ethereum made on ZK starts paying off.

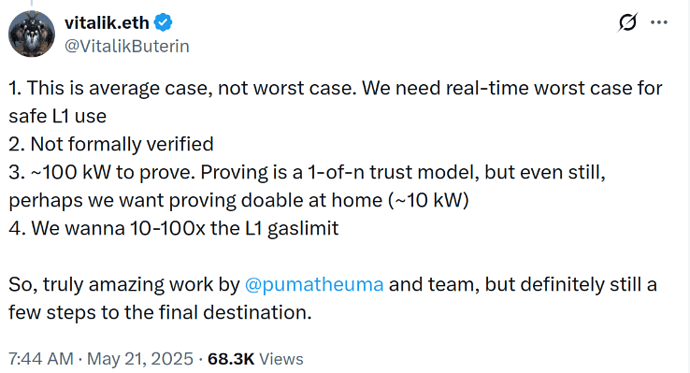

Can we scale the L1 as much as we want now? Is anything possible? For good reasons, we started discussing the limits: Formalizing decentralization goals in the context of larger L1 gaslimits and 2020s-era tech. In short: even in a world where most nodes can relax and only verify data availability and execution proofs, we want to make sure that the production of these proofs does not become a single choke point for the network, so we must keep some limits on how powerful we allow the nodes producing them to be. A good guideline is that if some people are still able to run them from home, it becomes hard to maintain a global ban.

There is one type of node that can’t really be distributed: A stateful node that remains able to follow the state and compute updated state roots (for example RPC, but also as part of the proving pipeline).

Proving is a bit more interesting, because in principle it is highly parallelizable not just across a single machine but across globally distributed networks, even with limited bandwidth; think of just splitting a block into many miniblocks to prove them. However, there are calls to apply our limits directly to provers:

I think that while these are good goals for the Ethereum network to have, we should “suspend” the strict decentralization limits for proving for the next few years. Provers have improved by many orders of magnitude over the last few years, with proving overhead falling from the trillions to around a million today. We can expect several more orders of magnitude over the next few years (with @vbuterin believing it will ultimately be only a single-digit overhead, which leaves almost a millionfold improvement still to go from today). This may take some years to materialize (and might ultimately depend on new types of hardware specialized in proving), but I suggest we should not repeat the mistake from the last few years: Let’s “suspend” the rules and be less strict about L1 provers for five years.
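To make the overhead arithmetic concrete, here is a minimal sketch. The three overhead values are round illustrative numbers read off the paragraph above (“trillions”, “around a million”, “single digit”), not measurements, and the function name is made up for this example.

```python
import math


def orders_of_magnitude(before: float, after: float) -> float:
    """Return how many powers of ten separate two overhead factors."""
    return math.log10(before / after)


# Illustrative round numbers only (assumptions, not benchmarks).
PAST_OVERHEAD = 1e12    # "trillions"
TODAY_OVERHEAD = 1e6    # "around a million"
TARGET_OVERHEAD = 9     # upper end of "single digit"

gained = orders_of_magnitude(PAST_OVERHEAD, TODAY_OVERHEAD)
remaining = orders_of_magnitude(TODAY_OVERHEAD, TARGET_OVERHEAD)

print(f"orders of magnitude gained so far: {gained:.1f}")
print(f"orders of magnitude still to go:   {remaining:.1f}")
```

Under these assumed numbers, the improvement still to come is somewhat smaller than the one already achieved, but of the same order.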

Why it’s ok to be more relaxed about proving

There are several good reasons why proving can be more relaxed than other parts of the stack:

1. Unlike other parts of the stack, scaling back does solve proving

One of the reasons we were always extremely careful about scaling the L1 was that there was “no going back”: yes, you can lower the gas limit again, but the large state size and its downsides remain. However, there is no such “ratchet effect” for proving. If we scale to 3 gigagas, and a global regulatory attack on provers happens, we can go back to today’s gas limit, and even though the state has grown, this does not really affect proving (except for slightly larger state witnesses, but logarithmic growth is manageable in practice). The provers are not a very effective choke point.

In fact, we could design our consensus so that it locks in transactions before proving, so that the only thing an attack could do is slow things down: a graceful degradation.
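The remark in point 1 that state growth only enlarges witnesses logarithmically can be illustrated with a toy model. This sketch assumes a balanced binary Merkle tree with 32-byte hashes, which is a simplification and not Ethereum's actual state trie; the function name and the leaf counts are hypothetical.

```python
HASH_BYTES = 32  # assumption: 32-byte hash per sibling node


def witness_bytes(num_leaves: int) -> int:
    """Approximate size of one Merkle branch in a balanced binary tree.

    The branch holds one sibling hash per level, and the number of
    levels is ceil(log2(num_leaves)).
    """
    if num_leaves < 1:
        raise ValueError("tree must have at least one leaf")
    depth = (num_leaves - 1).bit_length()  # integer ceil(log2(n))
    return depth * HASH_BYTES


# Hypothetical state sizes: the second is 16x larger than the first.
small_state = 2**30
large_state = 2**34

print(witness_bytes(small_state), "bytes per branch")
print(witness_bytes(large_state), "bytes per branch")
```

In this model a 16x larger state adds only four more hashes to each branch, which is the sense in which witness growth stays manageable.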

2. Proving can be ultra-parallelized

Should the vision of “single-digit overhead proving” not materialize, there are still other ways to make sure that we won’t have prover chokepoints. At the cost of slightly increased latency, we can distribute proving across tens, hundreds or even thousands of machines. While it would not be a strict “one out of n” honesty assumption on these, it would still be an honest minority assumption.
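The latency trade-off can be sketched with a simple model. It assumes that the proving work for a block splits evenly across machines and that the resulting miniblock proofs are merged in a tree, with each merge round costing a fixed time. All function names, parameters and numbers here are hypothetical; real provers will not split this cleanly.

```python
def aggregation_rounds(num_provers: int, fan_in: int = 2) -> int:
    """Number of merge rounds needed to combine all proofs into one."""
    rounds = 0
    remaining = num_provers
    while remaining > 1:
        remaining = -(-remaining // fan_in)  # ceiling division
        rounds += 1
    return rounds


def proving_latency(total_work_s: float, num_provers: int,
                    agg_step_s: float, fan_in: int = 2) -> float:
    """Wall-clock time: parallel miniblock proving plus merge rounds."""
    leaf_time = total_work_s / num_provers
    return leaf_time + aggregation_rounds(num_provers, fan_in) * agg_step_s


# Hypothetical block needing 1024 machine-seconds, 1 s per merge round.
for n in (1, 16, 1024):
    print(n, "provers:", proving_latency(1024, n, agg_step_s=1.0), "s")
```

In this model the per-machine work shrinks linearly with the number of provers, while the added aggregation latency grows only logarithmically.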

Let’s not repeat our previous mistakes

Proving at scale is a huge win for Ethereum. It’s an industry that was bootstrapped both by many community investments and the rollup-centric roadmap. Not using these powers now that we have them would be our biggest mistake yet.

While we would probably be in a better place if we had decided on a moderate L1 scaling roadmap of ca. 10x in 2021, we understandably did not go for it: At the time, 10x did not seem to be enough of a factor to matter, and it wasn’t clear how to continue from there. Yet, we are paying dearly for this, as Ethereum would have probably stayed much more competitive over the last few years had it continued to make strong investments in L1 engineering.

We should not repeat this mistake now by choking scaling again due to concerns about prover decentralization. Prover centralization, for all the points mentioned above, is different: (1) it probably won’t last, as prover efficiency keeps improving, (2) it’s reversible (we can scale back), and (3) if push comes to shove, we can slightly increase latency and distribute proving across many machines.