After writing my previous post, Eth1 architecture working group, I have been thinking about how to kick-start this group and what process we should have. Here is what I did not want:

- Vanity Q&A calls or lectures by “experts” educating the crowds

- Lots of chatter or calls without substantive documents and code to work on

- A group relying on one or two individuals doing all the work while everyone else observes

To test whether this will work (or not), I would like us to start by solving a concrete architectural task. A solution to this task can enable a host of practical applications, and it will be a good test of how such a group can work. We might also conclude that it simply does not work unless we just hire people and set them to work (which is my current suspicion).

Here is the first task.

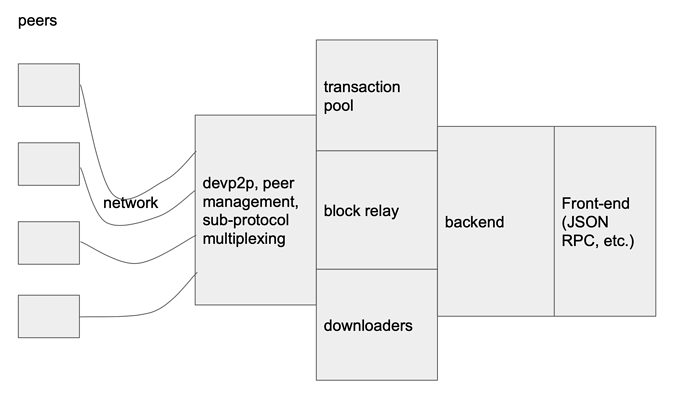

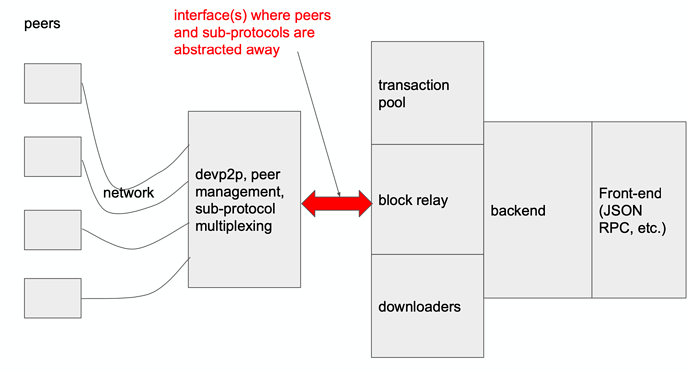

The first diagram shows a very rough architecture of an eth1 client. The important thing to note is that the transaction pool, downloader, and block relay all communicate with peers via a “networking stack”. That stack includes a few “layers” and concerns, but a pervasive theme I found in the code is the proliferation of “peer management”. In the go-ethereum code, for example, this translates into at least three entities called peer in different packages (the p2p package, the eth package, and the eth/downloader package). I wonder if the concern of peer management can be abstracted away into a separate component (and this component perhaps even moved into a separate process). To do that, we need to devise a good interface (and protocol) to connect these parts, something like this:

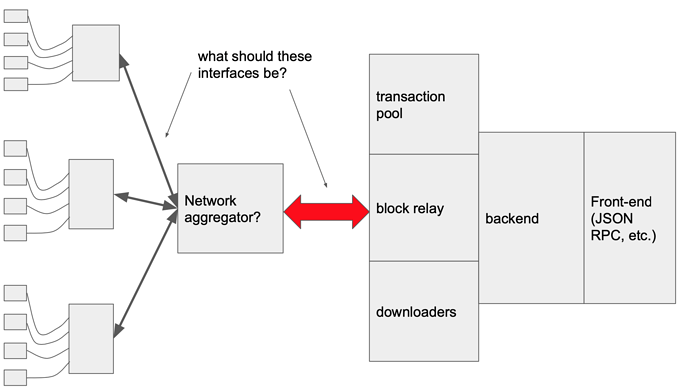
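
To make the question more concrete, here is a minimal sketch in Go of what such an interface might look like. Everything in it is an assumption made for illustration: the names `PeerManager`, `PeerID`, `Message`, and `memoryPeers`, the four methods, and the message code are hypothetical and are not taken from go-ethereum or any other client. The in-memory implementation only stands in for a real networking stack so that the example runs.

```go
package main

import (
	"errors"
	"fmt"
)

// PeerID is an opaque handle; consumers never see connection details.
type PeerID string

// Message is a protocol message received from, or addressed to, a peer.
type Message struct {
	From    PeerID
	Code    uint64 // protocol message code (values used here are illustrative)
	Payload []byte
}

// PeerManager is a hypothetical interface that hides discovery, handshakes,
// and peer bookkeeping from the transaction pool, downloader, and block relay.
type PeerManager interface {
	SendTo(peer PeerID, code uint64, payload []byte) error // message to one peer
	Broadcast(code uint64, payload []byte) int              // returns peers reached
	Subscribe(code uint64) <-chan Message                   // inbound messages by code
	Penalize(peer PeerID, reason string)                    // report misbehaviour
}

var errUnknownPeer = errors.New("unknown peer")

// memoryPeers is an in-memory stand-in for a real networking stack.
type memoryPeers struct {
	outbox map[PeerID][]Message      // messages sent to each connected peer
	subs   map[uint64][]chan Message // subscribers per message code
}

func newMemoryPeers(ids ...PeerID) *memoryPeers {
	m := &memoryPeers{outbox: map[PeerID][]Message{}, subs: map[uint64][]chan Message{}}
	for _, id := range ids {
		m.outbox[id] = nil // register the peer with an empty outbox
	}
	return m
}

func (m *memoryPeers) SendTo(peer PeerID, code uint64, payload []byte) error {
	if _, ok := m.outbox[peer]; !ok {
		return errUnknownPeer
	}
	m.outbox[peer] = append(m.outbox[peer], Message{Code: code, Payload: payload})
	return nil
}

func (m *memoryPeers) Broadcast(code uint64, payload []byte) int {
	for id := range m.outbox {
		m.outbox[id] = append(m.outbox[id], Message{Code: code, Payload: payload})
	}
	return len(m.outbox)
}

func (m *memoryPeers) Subscribe(code uint64) <-chan Message {
	ch := make(chan Message, 16) // buffered so deliver does not block in this sketch
	m.subs[code] = append(m.subs[code], ch)
	return ch
}

func (m *memoryPeers) Penalize(peer PeerID, reason string) {
	delete(m.outbox, peer) // simplest possible policy: drop the peer
}

// deliver simulates an inbound message arriving from the network.
func (m *memoryPeers) deliver(msg Message) {
	for _, ch := range m.subs[msg.Code] {
		ch <- msg
	}
}

func main() {
	peers := newMemoryPeers("peer-a", "peer-b")
	var pm PeerManager = peers // consumers would only ever hold this interface

	const newTxCode = 0x02 // illustrative message code
	inbound := pm.Subscribe(newTxCode)
	peers.deliver(Message{From: "peer-a", Code: newTxCode, Payload: []byte("tx")})

	msg := <-inbound
	fmt.Printf("got %q from %s\n", msg.Payload, msg.From)

	pm.Penalize(msg.From, "invalid transaction")
	fmt.Println("peers reached:", pm.Broadcast(newTxCode, []byte("tx2")))
}
```

The point of the sketch is that a consumer such as the transaction pool would depend only on `PeerManager` and an opaque `PeerID`, so it would not need its own peer type. Whether four methods like these preserve enough functionality (for example, for the downloader's peer selection) is exactly the open question.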

There is also potential (and some interesting uses) for an aggregator component, which would allow a single node to connect to multiple networks, like this:
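
In code terms, one way to picture the aggregator is as a router that sits in front of several per-network peer-management components. The sketch below is only an assumption about how that could look: `Aggregator`, `NetworkID`, `Sender`, the stub `fixedPeers`, and the network labels are all hypothetical names introduced for illustration.

```go
package main

import "fmt"

// NetworkID names one network the node is connected to (labels are hypothetical).
type NetworkID string

// Sender is the minimal, hypothetical slice of a peer-management interface
// that this example needs.
type Sender interface {
	Broadcast(payload []byte) int // returns the number of peers reached
}

// Aggregator routes each call to the peer manager of the requested network.
type Aggregator struct {
	networks map[NetworkID]Sender
}

// Broadcast forwards the payload to one network, or fails if the node is not
// connected to it.
func (a *Aggregator) Broadcast(network NetworkID, payload []byte) (int, error) {
	s, ok := a.networks[network]
	if !ok {
		return 0, fmt.Errorf("not connected to network %q", network)
	}
	return s.Broadcast(payload), nil
}

// fixedPeers is a stub that pretends to reach a fixed number of peers.
type fixedPeers int

func (f fixedPeers) Broadcast([]byte) int { return int(f) }

func main() {
	agg := &Aggregator{networks: map[NetworkID]Sender{
		"network-1": fixedPeers(25),
		"network-2": fixedPeers(8),
	}}

	reached, err := agg.Broadcast("network-2", []byte("block"))
	fmt.Println(reached, err)

	_, err = agg.Broadcast("network-3", []byte("block"))
	fmt.Println(err)
}
```

With this shape, the components behind the aggregator stay unaware of how many networks exist; they only address a network by its identifier.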

I hope I have given enough information about the problem and what is desired (I am wary of overcommitting to this effort unless I see solid participation), but if not, please ask questions in the comments. Please also let me know if you think this is the wrong first task for the eth1 architecture group, and if so, what would be a better starting task.

I would like participants to make concrete proposals (in any form) about the design of the interfaces: what functionality the interfaces should allow, how peer management can be abstracted away without taking away crucial functionality, and so on. Depending on how many proposals there are, we will decide later on the review process and schedule a discussion.