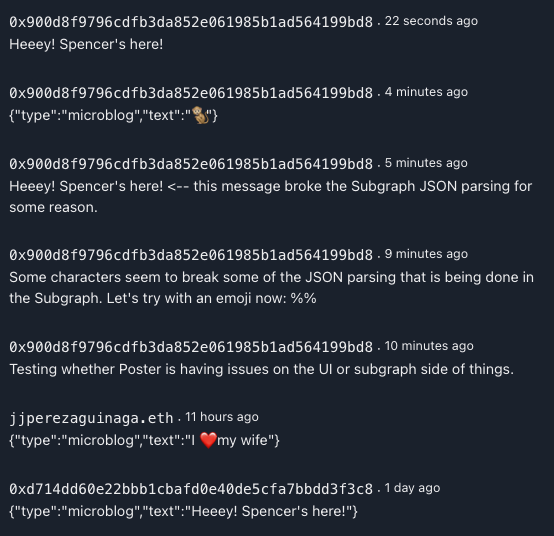

Indeed, right now the latest subgraph does not consider any of the specifications provided in the original post. This is (now) being tracked in the following issues:

After sleeping on it and giving it some thought (on top of the questions raised by @ezra_w), I now see that the most viable solution is indeed to build the entire state in the subgraph from on-chain data, as originally suggested. Here’s why:

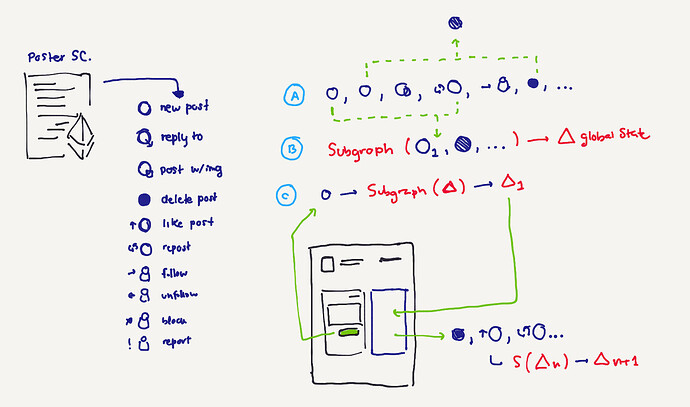

All actions sent via Poster (A) can be parsed in a single run by a Subgraph (B), generating a global state. Each new action then only needs to be applied as a delta on top of that global state, so the subgraph always reflects the latest state.
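To make the idea concrete, here is a minimal sketch of that flow in plain TypeScript. It is not the actual subgraph mapping: the `Action` shape, the `post`/`like` action types and all function names are assumptions made up for illustration, since the real Poster payload format is defined elsewhere.

```typescript
// Hypothetical action shapes; the real Poster payload format may differ.
type Action =
  | { type: "post"; id: string; author: string; text: string }
  | { type: "like"; postId: string; author: string };

interface GlobalState {
  posts: Record<string, { author: string; text: string }>;
  likes: Record<string, string[]>; // postId -> accounts that liked it
}

function emptyState(): GlobalState {
  return { posts: {}, likes: {} };
}

// Apply a single action as a delta on the existing state (mutates in place).
function applyAction(state: GlobalState, action: Action): GlobalState {
  switch (action.type) {
    case "post":
      state.posts[action.id] = { author: action.author, text: action.text };
      break;
    case "like": {
      // Ignore likes that point at a post we have never seen.
      if (!(action.postId in state.posts)) break;
      const likers = state.likes[action.postId] || [];
      // A given account can only like a post once.
      if (likers.indexOf(action.author) === -1) likers.push(action.author);
      state.likes[action.postId] = likers;
      break;
    }
  }
  return state;
}

// Full rebuild: fold every action, in order, into a fresh state.
function buildState(actions: Action[]): GlobalState {
  return actions.reduce((state, action) => applyAction(state, action), emptyState());
}

// Granular view ("my likes"): read a slice of the state without rebuilding it.
function likedBy(state: GlobalState, account: string): string[] {
  return Object.keys(state.likes).filter(
    (postId) => state.likes[postId].indexOf(account) !== -1
  );
}
```

`buildState` corresponds to the initial single run over all past actions, while calling `applyAction` on its result corresponds to handling one new action as it arrives. In a real subgraph the state would live in entities rather than in memory, but the shape of the logic should be similar.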

In short, by keeping everything on-chain, we can always build the global state via the subgraph, leaving only minimal operations (and minimal overhead) for the client. Furthermore, we can display chunks of the global state in a granular way, without having to rebuild the state for a particular view (e.g. my likes). And last but not least, composability: the subgraph in charge of the entire social network can be composed upon, allowing anyone to create sub-networks or other products on top of the whole thing. It’s as if Twitter’s entire content were open source and ready to compose on.

So now we are on the same page, and I will move on with the UI modifications.

@ezra_w since you showed some interest in the subgraph part, are you up for modifying the existing one to allow building the needed state? I’ll tackle that next after I’m done with the UI, but would be good to get some help there.

P.S. I actually reached out to the Ceramic team and got some interesting insights, in particular:

- The cost of every single operation (i.e. every write needing some gas), and

- The lack of a double-spend or global consensus need in a content publishing platform.

On the first point, I would argue that L2s can tackle it, not to mention that we could put some mechanisms in place to reduce or offset the cost of publishing (e.g. via OpenGSN relayers). An example is how Mirror requires $WRITE tokens to publish, but we can open that Pandora’s box later.

The second point is indeed true if you assume content has no value. I would argue it does, so agreeing on who wrote what, and who wrote it first, does require a global consensus. Furthermore, streams (their append-only data model) would require composing the global state somewhere, forcing clients to take on that overhead every time and/or rely on some trusted gateway, killing decentralization.

In short, I love the Ceramic project, but I do not think it fits our use case at the moment. I’ll still explore it for IDX to allow enriching the profile data, but will do that separately from Poster.

Back to building!