At the time of the Muir Glacier hard fork, I committed to proposing an update to the Ice Age that is easier to predict and that clarifies its intended function. More is written here: https://eips.ethereum.org/EIPS/eip-2387

The following is the first step to fulfill that commitment. The PR is here https://github.com/ethereum/EIPs/pull/2515 and the draft is as follows.

Simple Summary

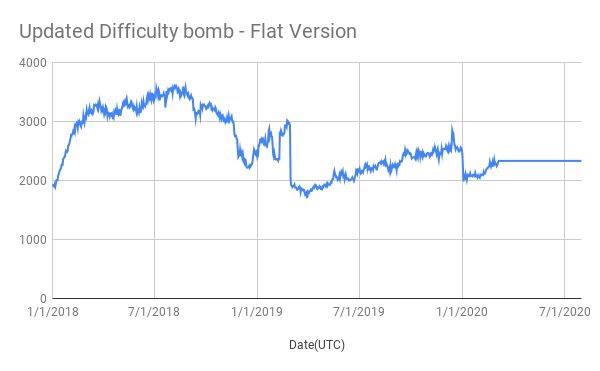

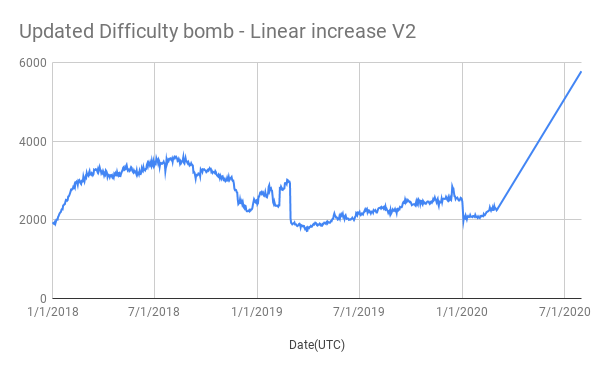

The Difficulty Freeze is an alternative to the Difficulty Bomb that is implemented within the protocol's difficulty adjustment algorithm. The Difficulty Freeze begins at a certain block height, determined in advance, and freezes the difficulty. This does not stop the chain, but it incentivizes devs to upgrade at a regular cadence and requires any chain split to address the Difficulty Freeze.

Abstract

The Difficulty Freeze is a mechanism that is easy to predict and model, and the pressure of missing it is felt more readily by the core developers and client maintainers. The client maintainers are also the group best positioned to respond to an incoming Difficulty Freeze.

Motivation

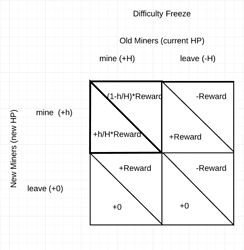

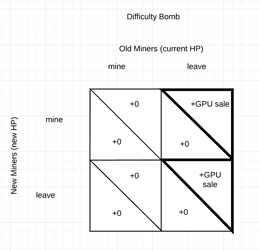

The current Difficulty Bomb's effect on the block time targeting mechanism is rather complex to model. It has appeared when it was not expected (Muir Glacier), and it has negatively affected miners when they were not the target (in the case of forks delayed by technical difficulties). Miners are affected by a reduction in block rewards due to the increase in block time. Users are affected because increased block times reduce the usability of the chain. Neither group is able to address the Difficulty Bomb on its own. In the case of the Difficulty Freeze, the consequences of missing it are felt more directly by the client maintainers.

Specification

Add variable DIFFICULTY_FREEZE_HEIGHT

The logic of the Difficulty Freeze is defined as follows:

    if (BLOCK_HEIGHT <= DIFFICULTY_FREEZE_HEIGHT):
        block_diff = parent_diff + parent_diff // 2048 * max(
            1 - (block_timestamp - parent_timestamp) // 10, -99)
    else:
        block_diff = parent_diff
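As a minimal runnable sketch of the rule above, the pseudocode can be written as a Python function. The function name `calc_difficulty`, its argument names, and the example freeze height are assumptions made for illustration only; they are not part of the draft.

```python
def calc_difficulty(block_height, parent_diff, block_timestamp,
                    parent_timestamp, freeze_height):
    """Difficulty of a block under the Difficulty Freeze rule (illustrative sketch)."""
    if block_height <= freeze_height:
        # Up to and including the freeze height: move by parent_diff // 2048
        # per step, with the downward adjustment capped at -99 steps.
        adjustment = max(1 - (block_timestamp - parent_timestamp) // 10, -99)
        return parent_diff + parent_diff // 2048 * adjustment
    # Past the freeze height: difficulty is inherited unchanged from the parent.
    return parent_diff


FREEZE_HEIGHT = 10_000_000  # hypothetical value, chosen only for this example

# A block mined 5 s after its parent, before the freeze: difficulty rises.
print(calc_difficulty(9_999_999, 2_048_000, 1_005, 1_000, FREEZE_HEIGHT))
# The same timing after the freeze: difficulty equals the parent's.
print(calc_difficulty(10_000_001, 2_048_000, 1_005, 1_000, FREEZE_HEIGHT))
```

Note that with `<=` the block at exactly `freeze_height` still adjusts; the first frozen block is the one after it.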

Optional Implementation

Add the variable DIFFICULTY_FREEZE_DIFFERENCE and use the LAST_FORK_HEIGHT to calculate when the Difficulty Freeze would occur.

For example, we can set DIFFICULTY_FREEZE_DIFFERENCE (DFD) to 1,800,000 blocks, or approximately 9 months. The difficulty calculation would then be:

    if (BLOCK_HEIGHT <= LAST_FORK_HEIGHT + DIFFICULTY_FREEZE_DIFFERENCE):
        block_diff = parent_diff + parent_diff // 2048 * max(
            1 - (block_timestamp - parent_timestamp) // 10, -99)
    else:
        block_diff = parent_diff

This approach would have the added benefit that updating the Difficulty Freeze is easier as it happens automatically at the time of every upgrade. The trade-off is that the logic for checking is more complex and would require further analysis and test cases to ensure no consensus bugs arise.
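To make the optional variant concrete, here is a small sketch of how the freeze height would be derived from the last fork and how it moves when a new fork ships. The helper names, the example fork heights, and the 13-second block time used to convert blocks into days are all assumptions for illustration; the draft only specifies the two constants.

```python
DIFFICULTY_FREEZE_DIFFERENCE = 1_800_000  # blocks; the example DFD from the text
ASSUMED_BLOCK_TIME = 13                   # seconds; assumption for estimates only


def freeze_height(last_fork_height):
    """Last block height at which difficulty still adjusts."""
    return last_fork_height + DIFFICULTY_FREEZE_DIFFERENCE


def is_frozen(block_height, last_fork_height):
    """True once the block is past the derived freeze height."""
    return block_height > freeze_height(last_fork_height)


def blocks_to_days(blocks, block_time=ASSUMED_BLOCK_TIME):
    """Rough wall-clock duration of a number of blocks, in days."""
    return blocks * block_time / 86_400


# Example fork heights, used here only as illustrative inputs.
old_fork, new_fork = 9_200_000, 10_500_000

print(freeze_height(old_fork))                 # freeze derived from the old fork
print(is_frozen(11_200_000, old_fork))         # past the old freeze height
print(is_frozen(11_200_000, new_fork))         # a newer fork pushes the freeze out
print(round(blocks_to_days(DIFFICULTY_FREEZE_DIFFERENCE)))  # days covered by the DFD
```

The point of the design is visible in the last two `is_frozen` calls: shipping any upgrade resets the window without touching a hard-coded height, at the cost of the client needing to know the last fork height when validating difficulty.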

Rationale

Block height is very easy to predict and evaluate within the system. This removes the effect of the Difficulty Bomb on block time, simplifying the block time targeting mechanism.

Backwards Compatibility

No backward incompatibilities

Security Considerations

Missing the Difficulty Freeze has a different impact than missing the Difficulty Bomb. Once the difficulty is frozen, the protocol is no longer able to adapt to changes in hash power on the network. This can lead to one of three scenarios:

- The hash rate increases: Block times would decrease on the network. At the same time, uncles would increase. At some point the block time would be short enough that clients would not be able to sync fully.

- The hash rate decreases: Block times would increase.

- The hash rate stays the same: No change to block time.

Client teams want their clients to sync fully to the network, and so are strongly motivated to keep this situation from occurring. At the same time, delaying the Difficulty Freeze is most easily implemented by client teams. Therefore the group that is most negatively affected is also the group that can most efficiently address it.
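The three hash rate scenarios above can be illustrated with a rough model. The sketch assumes the idealized proof-of-work relationship in which the expected block time is the difficulty divided by the network hash rate, and it ignores network latency and uncles. The function name and all numeric values are hypothetical, picked only so that the baseline lands near 13 seconds.

```python
def expected_block_time(difficulty, hash_rate):
    """Expected seconds per block, treating difficulty as expected hashes per block."""
    return difficulty / hash_rate


FROZEN_DIFFICULTY = 2.6e15   # hypothetical frozen difficulty
BASELINE_HASH_RATE = 2.0e14  # hypothetical hash rate in hashes per second

scenarios = [
    ("hash rate increases 2x", 2.0),
    ("hash rate decreases 2x", 0.5),
    ("hash rate stays the same", 1.0),
]

for label, factor in scenarios:
    # Difficulty cannot respond, so block time scales inversely with hash rate.
    seconds = expected_block_time(FROZEN_DIFFICULTY, BASELINE_HASH_RATE * factor)
    print(f"{label}: ~{seconds:.1f} s per block")
```

Under this model, block time is inversely proportional to hash rate once the difficulty stops adjusting, which is why a sustained rise in hash rate is the scenario that eventually threatens syncing.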

Economic Considerations

Under the current Difficulty Bomb, issuance of ETH is reduced as the Ice Age takes effect. Under the Difficulty Freeze, it is more likely that issuance would increase; however, client teams are motivated to prevent this and keep clients syncing effectively, so it is much less likely to occur.
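A back-of-the-envelope sketch shows why the two mechanisms push issuance in opposite directions. It counts only the base block reward, ignores uncle rewards, and assumes a 2 ETH reward; the function name and the block times are hypothetical inputs rather than measurements.

```python
SECONDS_PER_DAY = 86_400
ASSUMED_BLOCK_REWARD = 2  # ETH per block; an assumption for this example


def daily_issuance(block_reward, block_time):
    """ETH issued per day from base block rewards alone (uncle rewards ignored)."""
    return block_reward * SECONDS_PER_DAY / block_time


# Hypothetical block times for three situations.
situations = {
    "normal operation": 13,
    "Ice Age in effect (slower blocks)": 20,
    "frozen difficulty, hash rate up (faster blocks)": 10,
}

for label, block_time in situations.items():
    eth = daily_issuance(ASSUMED_BLOCK_REWARD, block_time)
    print(f"{label}: ~{eth:,.0f} ETH/day")
```

Slower blocks under the bomb mean fewer rewards per day, while faster blocks under a frozen difficulty mean more, which is the asymmetry the paragraph above describes.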

It is also easy to predict when this change would happen, and affected stakeholders (ETH holders) can keep client developers accountable by observing when the Difficulty Freeze is approaching and yelling at them on Twitter.