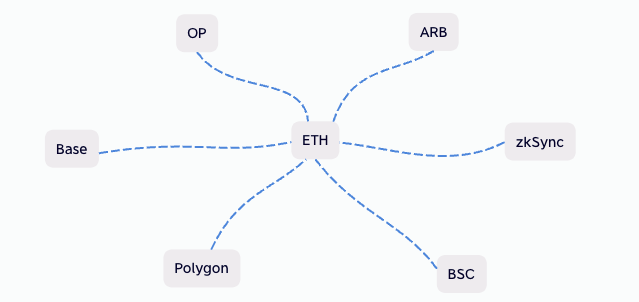

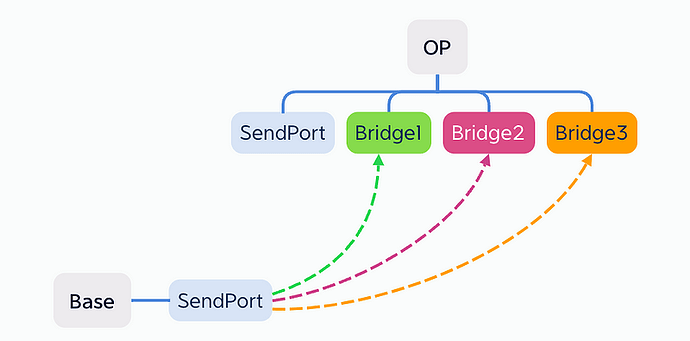

The number of Layer 2 (L2) solutions keeps growing, and each official L2 implementation typically acts only as a bridge to Layer 1 (L1). The result looks like this:

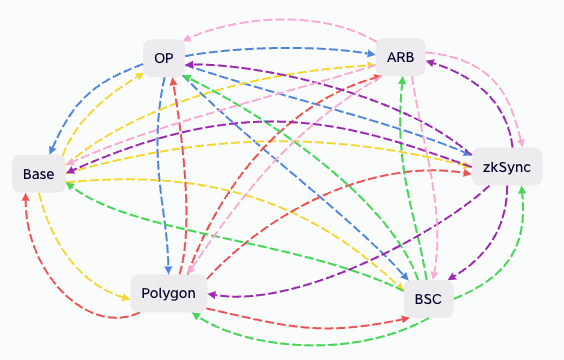

If we want full interoperability among all L2 solutions, every chain has to connect directly to every other chain.

For example, with six blockchains, each one needs to establish cross-chain connections with the other five. In general, n blockchains require n * (n-1) cross-chain bridges. (Note: what is commonly perceived as a single cross-chain bridge actually consists of two separate bridges, one for A to B and another for B to A.)

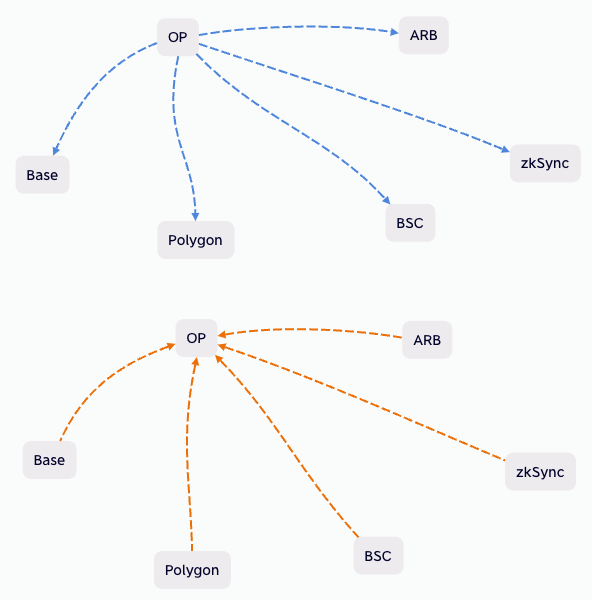

Cross-chain bridges essentially relay information from Chain A to Chain B. To optimize the cross-chain process, we propose a shift from a push mechanism to a pull mechanism. Each blockchain would consolidate the data from all the other blockchains (five in the example above) and write it to its own chain as a single transaction. This approach significantly reduces the number of required cross-chain bridges.
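To make the pull idea concrete, here is a minimal sketch in Python (used only for illustration, not a contract implementation). The names `SourceEntry` and `build_pull_batch`, the integer chain ids, and the `outgoing` mapping are all hypothetical and not part of the proposal.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class SourceEntry:
    source_chain_id: int  # hypothetical identifier of the chain the data came from
    payload: bytes        # compact data that chain exposes for cross-chain use


def build_pull_batch(target_chain_id: int, outgoing: Dict[int, bytes]) -> List[SourceEntry]:
    """Consolidate the data of every *other* chain into one batch for the target chain."""
    return [
        SourceEntry(chain_id, payload)
        for chain_id, payload in sorted(outgoing.items())
        if chain_id != target_chain_id
    ]


# Six chains, each with some data waiting to be pulled (placeholder values).
outgoing = {chain_id: f"data-from-{chain_id}".encode() for chain_id in range(1, 7)}
batch = build_pull_batch(target_chain_id=1, outgoing=outgoing)
print(len(batch))  # 5 entries, written to chain 1 in a single transaction
```

The point of the sketch is only that chain 1 needs one consolidated write, instead of five separate bridges each pushing into it.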

With n blockchains, only n cross-chain bridges are needed.

With 6 blockchains, the push design requires 30 cross-chain bridges, while the pull design needs only 6.

With 10 blockchains, the push design requires 90 cross-chain bridges, while the pull design needs only 10.
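The counts above can be checked with a few lines of Python. The function names are just for illustration.

```python
def bridges_push(n: int) -> int:
    """Directed bridges needed when every chain pushes to every other chain."""
    return n * (n - 1)


def bridges_pull(n: int) -> int:
    """Bridges needed when each chain pulls from all others in one consolidated transaction."""
    return n


for n in (6, 10):
    print(f"{n} chains: push={bridges_push(n)}, pull={bridges_pull(n)}")
```

The push count grows quadratically with the number of chains, while the pull count grows linearly.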

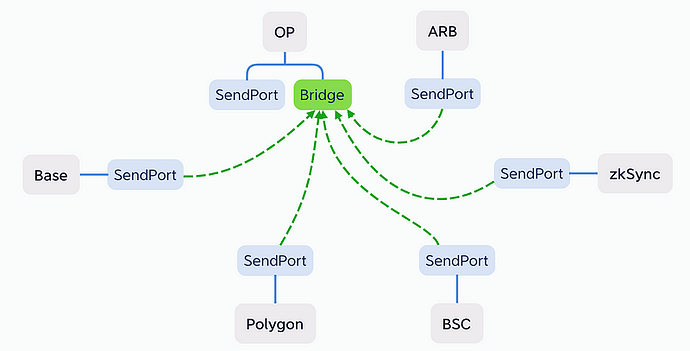

No cross-chain bridge can guarantee 100% security!

By dividing the cross-chain bridge into a SendPort layer and a Bridge layer, we can at least aim to make the SendPort layer fully secure.

SendPort is a smart contract that acts as a data port: it securely stores the data to be transferred across chains. As decentralized, autonomous public infrastructure, it operates at the protocol level, which is what gives it a high level of security.

Bridge, on the other hand, represents the various cross-chain bridge projects. Each project retrieves data from the same SendPort and transfers it to its own Bridge smart contract. The data transfer methods employed may include fraud proofs, multi-signature schemes, zero-knowledge proofs, and other secure techniques.

As illustrated above, the three cross-chain bridge projects all retrieve data from the same SendPort. Consequently, the data transported to their respective Bridge contracts should be identical. To enhance the security of cross-chain transfers, we can apply a “2 of 3” rule: data is accepted only when at least two of the three bridges agree on it. Even if one bridge is hacked, the cross-chain network still works, which significantly improves the overall security of cross-chain operations.
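A minimal sketch of such a “2 of 3” check, written in Python for illustration only. It assumes each bridge reports a single value (for example a hash) for the same batch of SendPort data, and that a bridge which has not reported yet is represented as `None`. The function name `accepted_value` is hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence


def accepted_value(reports: Sequence[Optional[bytes]], threshold: int = 2) -> Optional[bytes]:
    """Return the value reported by at least `threshold` bridges, or None if there is no quorum."""
    counts = Counter(r for r in reports if r is not None)
    if not counts:
        return None
    value, votes = counts.most_common(1)[0]
    return value if votes >= threshold else None


honest = bytes.fromhex("ab" * 32)  # placeholder for the value actually stored in SendPort
forged = bytes.fromhex("cd" * 32)  # placeholder for what a hacked bridge might report

print(accepted_value([honest, honest, forged]) == honest)  # one hacked bridge is outvoted
print(accepted_value([honest, forged, None]) is None)      # no two bridges agree yet
```

With three bridges and a threshold of two, at most one value can reach the threshold, so a single compromised bridge cannot get forged data accepted on its own.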

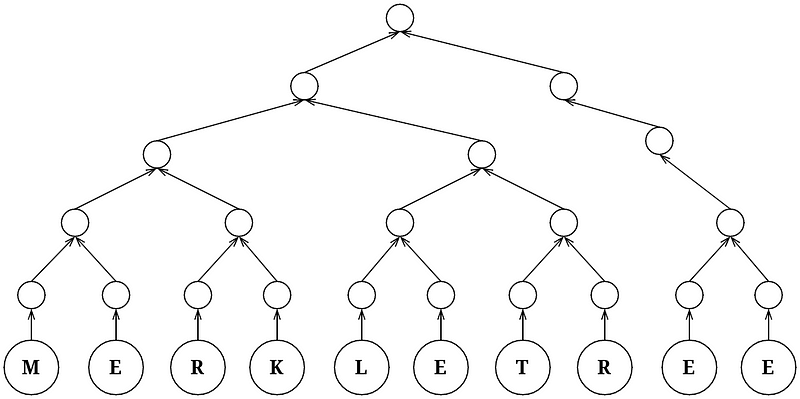

For efficient data transfer, the recommended approach is to use a Merkle tree of hashes.

We send the hash of the data that needs to cross chains to the SendPort contract, which automatically builds the Merkle tree. Only the root hash needs to be transported in order to verify the entire cross-chain data set on the target chain. The root hash is very small, which is why we can consolidate cross-chain data from, say, 100 chains into a single transaction for transportation to the target chain.
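The sketch below shows, in Python, how a root hash can stand in for many message hashes and how a single message is later checked against it. It is an illustration under stated assumptions, not the SendPort contract: SHA-256 is used because it is in the Python standard library (an on-chain version would more likely use keccak256), an odd node is paired with itself as a simplification, and all function names are hypothetical.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """All tree levels, leaves first and root last. An odd node is paired with itself."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    levels = [list(leaves)]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2 == 1:
            cur = cur + [cur[-1]]
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def merkle_root(leaves: List[bytes]) -> bytes:
    return build_levels(leaves)[-1][0]


def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True when the sibling sits on the right."""
    proof = []
    for level in build_levels(leaves)[:-1]:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        index //= 2
    return proof


def verify(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    node = leaf
    for sibling, sibling_on_right in proof:
        node = h(node + sibling) if sibling_on_right else h(sibling + node)
    return node == root


# Hashes of three cross-chain messages, as they would be sent to SendPort.
leaves = [h(b"message-a"), h(b"message-b"), h(b"message-c")]
root = merkle_root(leaves)            # the only value a bridge has to transport
proof = merkle_proof(leaves, 1)       # built off-chain by whoever sent message-b
print(verify(leaves[1], proof, root))
```

Only `root` crosses chains. The proof grows with the logarithm of the number of leaves, so verification on the target chain stays cheap even for large batches.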

Hitchhiking becomes feasible. You can send the cross-chain information to the SendPort, which is free of charge, and then wait for the cross-chain bridge to transport it, without incurring any additional transportation costs. Finally, you can verify the cross-chain data on the target chain, which is also free of charge. In other words, you have hitched a ride.

Applying the “2 of 3” rule described above lets you trust the cross-chain network, again free of charge.

I don't know whether a similar EIP already exists. If not, can I propose this one?