Thanks for taking the time to explore this!

Copyright Issues

Ah, excellent context — thanks! That’s a pretty horrendous situation for a community project to be in  The copyright for such a foundational component of this project absolutely should have been released much earlier, but that’s neither here nor there.

The copyright for such a foundational component of this project absolutely should have been released much earlier, but that’s neither here nor there.

It sounds like there’s a solve now, though, so that’s great

Unnamed Sources

My original source was the controversial semi(?) closed discussion in Prague, where it was brought up by someone else (I can’t remember who for the life of me). The Twitter Thread has a number of supporters, as well as some of the folks in this thread (including you

).

).

I agree that there is no strong consensus on this change!

I really enjoyed the IETF’s “rough consensus and running code” concept that came up at the EM Council of Prague. In the Jello Paper we have a more complete & working spec, and support from a number of people in the know. The question becomes if this is a “over my dead body”, and why if so?

The Yellow Paper is Dead, Long Live the Yellow Paper

Wonderful! Let people use whichever notation they like, and let a thousand flowers bloom! But even then, why not make the canonical spec for EIPs the formally verified executable one? People can still translate concepts into other notations, or have then generated directly by the K Framework. This was the intent of creating the Beige Paper by hand (since the Yellow Paper was too obtuse for most people), but the canonical spec was still assumed to be the YP.

One such setup could look like this:

Jello Paper

(canonical spec)

|

|

+------------------+---------------------+

| | |

| | |

Yellow Paper Beige Paper BNF & Proof Trees

(historical) (accessible) (mathematicians)

The Comprehensibility of K

I actually strongly disagree on needing formal training to read K

I’m not sure that I’d call K a “formal notation”. It is very much a programming language, and generates both executable code and documentation (conceptually in whatever notation you would like). This reads in a way not unlike modern Javascript’s arrow functions with destructuring, and consuming a K-based spec requires no additional study in PLT or specialized mathematical knowledge than any other programming language. Presumably you would like the writers of the spec in any notation to have some relevant background, though they may or may not be experts in K (I certainly am not one!)

For reference on how much more familiar K is than operational semantics, see the illustrative examples below

Example of K (from Jello Paper)

syntax UnStackOp ::= "POP"

rule <k> POP W => . ... </k>

syntax StackOp ::= DUP ( Int ) | SWAP ( Int )

rule <k> DUP(N) WS:WordStack => #setStack ((WS [ N -Int 1 ]) : WS) ... </k>

rule <k> SWAP(N) (W0 : WS) => #setStack ((WS [ N -Int 1 ]) : (WS [ N -Int 1 := W0 ])) ... </k>

syntax PushOp ::= PUSH ( Int , Int )

rule <k> PUSH(_, W) => W ~> #push ... </k>

Formal Notation

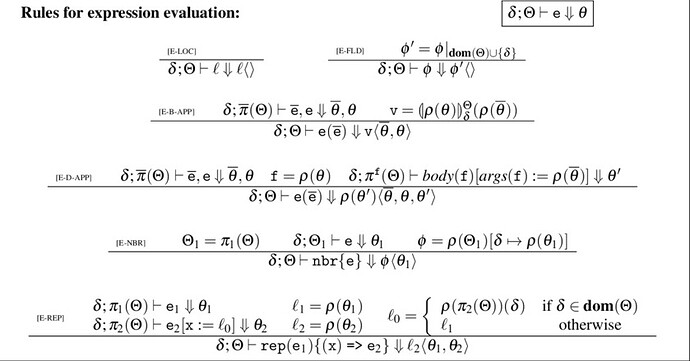

This is what a common formal notation looks like:

Call For “Over My Dead Bodies”

Correct me if I’m wrong, but you [Greg] are not an “over my dead body”. I would love to hear directly from people that are dead set against this idea, and why that’s the case for them! If you could point the people that you’re talking to at this thread, that would be very helpful!

If you have any experience with the functional paradigm in Haskell or even Javascript, it is very readable, and even if one doesn’t, it is very easy to get into.

If you have any experience with the functional paradigm in Haskell or even Javascript, it is very readable, and even if one doesn’t, it is very easy to get into. PM me / I’ll reach out and we can see about organizing.

PM me / I’ll reach out and we can see about organizing.